Introduction

Artificial Intelligence (AI) is no longer just a buzzword — it’s shaping industries, transforming businesses, and powering innovative solutions. But as AI systems become more complex, a critical challenge emerges: how can humans trust decisions made by machines? This is where Explainable AI (XAI) comes into play. For students and young professionals aspiring to work in AI, mastering XAI is a key skill that not only strengthens your technical portfolio but also builds credibility with stakeholders and AI experts.

What Is Explainable AI and Why It Matters

Explainable AI refers to methods and techniques that make AI models transparent and understandable to humans. Where a traditional “black-box” model returns only its output, XAI methods provide insight into how and why the model makes a specific prediction.

This transparency is vital because:

- Businesses need to justify AI-driven decisions to clients, regulators, and team members.

- It helps data scientists and AI engineers debug, improve, and validate models.

- Stakeholders gain confidence in AI solutions, which is crucial for adoption in sensitive domains like healthcare, finance, and autonomous systems.

Joining a professional AI Training Institute in Yamuna Vihar can help students learn XAI concepts hands-on, using real-world datasets and tools.

Key Principles to Build Trust Through Explainable AI

- Transparency

Ensure that your AI models are interpretable. Tools like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) allow users to see how each feature influences the model’s predictions.

- Consistency

Reliable AI outputs build trust. Train your models rigorously and validate them across diverse datasets to demonstrate consistent performance.

- Simplicity in Communication

Technical experts and non-technical stakeholders both need clarity. Present your AI findings in clear, simple visualizations that highlight the reasoning behind predictions.

- Ethical AI Practices

Consider the fairness, bias, and ethical implications of your models. Transparent documentation of modeling decisions helps keep AI solutions responsible and trustworthy.
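To make the transparency principle concrete, here is a minimal sketch of the idea behind SHAP: computing exact Shapley values for a single prediction. It is written from scratch in plain Python and does not use the SHAP library itself. The model (`toy_model`), the feature names, and the all-zero baseline are hypothetical, chosen only for illustration. Enumerating every coalition like this is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(instance)
    features = range(n)

    def value(coalition):
        # Model output when only the features in `coalition` take their real values.
        x = [instance[i] if i in coalition else baseline[i] for i in features]
        return predict(x)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical scoring model with an interaction between two features.
def toy_model(x):
    income, debt, years_employed = x
    return 0.5 * income - 0.8 * debt + 0.1 * income * years_employed


instance = [4.0, 2.0, 3.0]   # the prediction being explained (assumed values)
baseline = [0.0, 0.0, 0.0]   # assumed reference input

phi = shapley_values(toy_model, instance, baseline)
for name, contribution in zip(["income", "debt", "years_employed"], phi):
    print(f"{name}: {contribution:+.2f}")
```

In this toy case the contributions come out to roughly +2.60 for income, -1.60 for debt, and +0.60 for years employed. They sum to the gap between the model's output for the instance and for the baseline (1.6), which is the property that makes this kind of explanation easy to present to stakeholders.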

For hands-on experience and mentorship, enrolling in an AI Training Institute in Uttam Nagar provides exposure to real projects, practical case studies, and industry-standard tools — empowering students to implement XAI effectively.

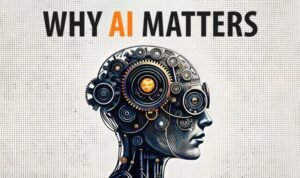

Tools and Techniques for Explainable AI

Some popular tools and frameworks that students and professionals can leverage include:

- SHAP and LIME: Analyze feature importance in complex models.

- InterpretML: A Python toolkit for model interpretation.

- AI Fairness 360: An open-source toolkit from IBM for detecting and mitigating bias in AI systems.

- Visualization Libraries: Matplotlib, Seaborn, and Plotly help communicate AI results visually.

By mastering these tools, students can not only understand models better but also demonstrate accountability to peers and clients — a skill highly valued in AI careers.
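As an illustration of what a fairness check measures, the sketch below computes two common group-fairness metrics, statistical parity difference and disparate impact, in plain Python. Toolkits such as AI Fairness 360 report metrics of this kind, but this code does not use that library. The function names, the predictions, and the group labels are all hypothetical.

```python
def selection_rate(predictions):
    """Share of positive (1) predictions in a list of 0/1 outcomes."""
    return sum(predictions) / len(predictions)


def fairness_report(predictions, groups, privileged):
    """Compare positive-prediction rates between two groups.

    statistical_parity_difference: unprivileged rate minus privileged rate
        (0 means both groups are selected at the same rate).
    disparate_impact: unprivileged rate divided by privileged rate
        (1 means both groups are selected at the same rate).
    """
    priv = [p for p, g in zip(predictions, groups) if g == privileged]
    unpriv = [p for p, g in zip(predictions, groups) if g != privileged]
    rate_priv = selection_rate(priv)
    rate_unpriv = selection_rate(unpriv)
    return {
        "statistical_parity_difference": rate_unpriv - rate_priv,
        # Guard against division by zero when no privileged case is selected.
        "disparate_impact": rate_unpriv / rate_priv if rate_priv else float("nan"),
    }


# Hypothetical model outputs (1 = approved) for ten applicants in two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

report = fairness_report(predictions, groups, privileged="A")
for metric, score in report.items():
    print(f"{metric}: {score:.2f}")
```

Here group A is approved 80% of the time and group B 20% of the time, giving a statistical parity difference of -0.60 and a disparate impact of 0.25. Numbers like these give peers and clients something specific to discuss when a model's fairness is questioned.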

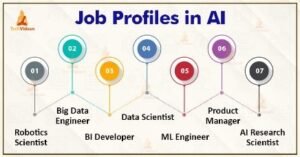

Career Benefits of Explainable AI Skills

Proficiency in XAI opens doors to high-demand roles:

- AI/ML Engineer

- Data Scientist

- Model Risk Analyst

- AI Consultant

- Research Scientist

Employers actively seek professionals who combine technical skills with the ability to explain AI decisions, making XAI expertise a career accelerator. Completing training at a reputed AI Training Institute in Yamuna Vihar or Uttam Nagar can help students gain the practical knowledge and confidence needed to excel in interviews and projects.

Final Thoughts

Explainable AI is no longer optional — it’s a career-defining skill for anyone entering the AI field. By learning how to build transparent, trustworthy AI models, students and young professionals can distinguish themselves in a competitive job market, contribute to ethical AI solutions, and gain the confidence of experts and stakeholders alike.

Start your journey today at a professional AI Training Institute in Yamuna Vihar or Uttam Nagar and turn your AI knowledge into trust, credibility, and a high-paying career.